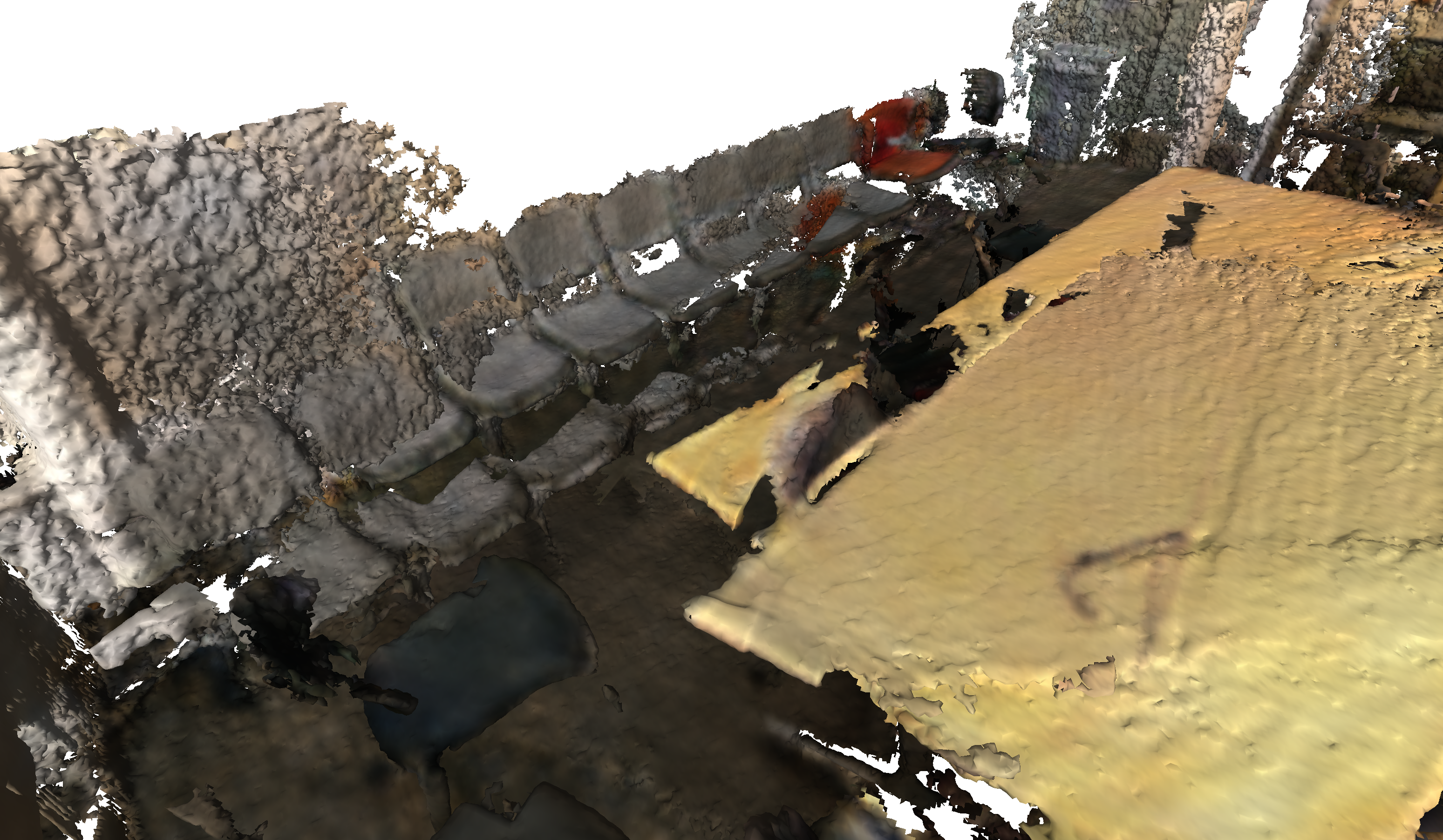

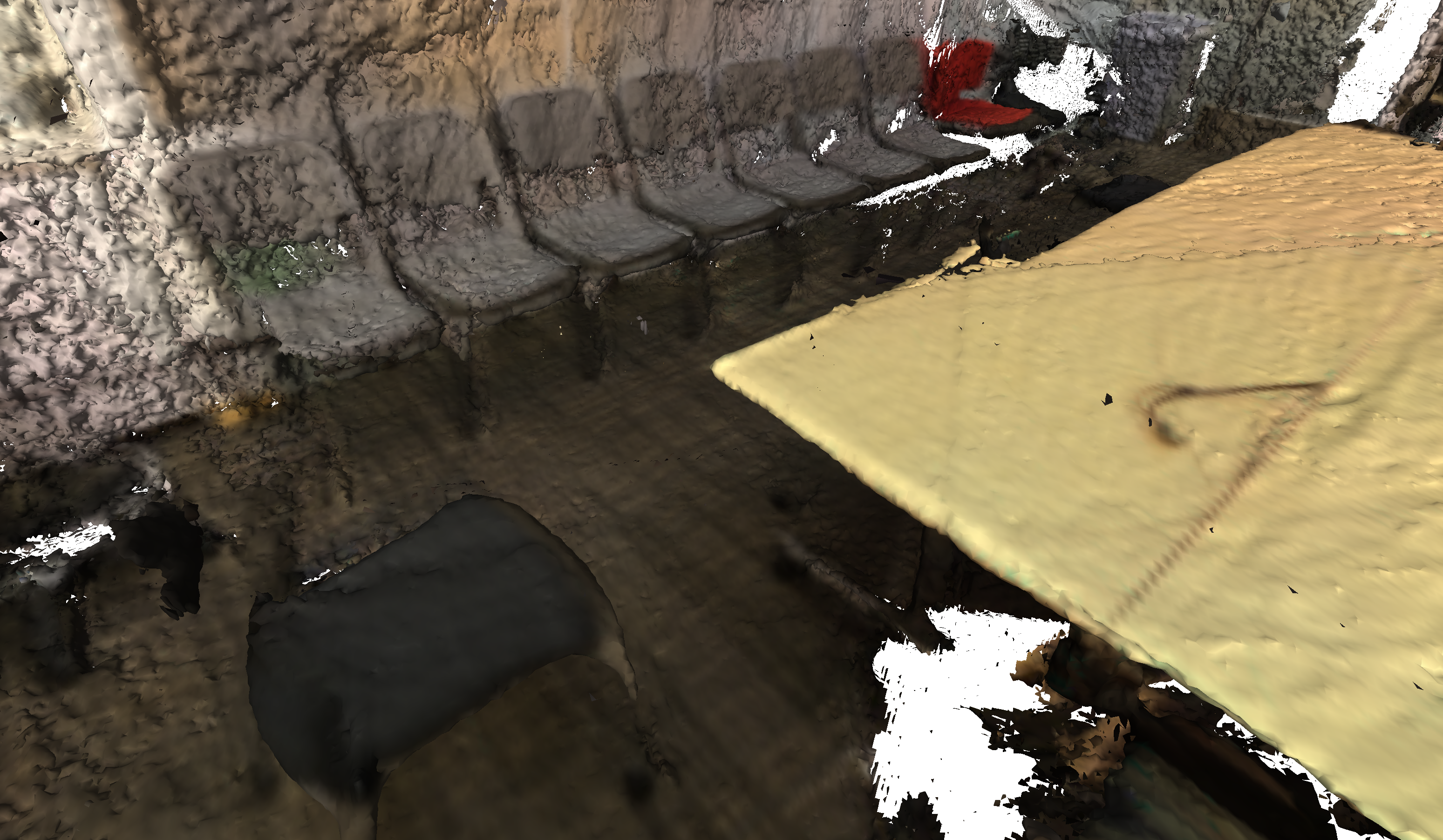

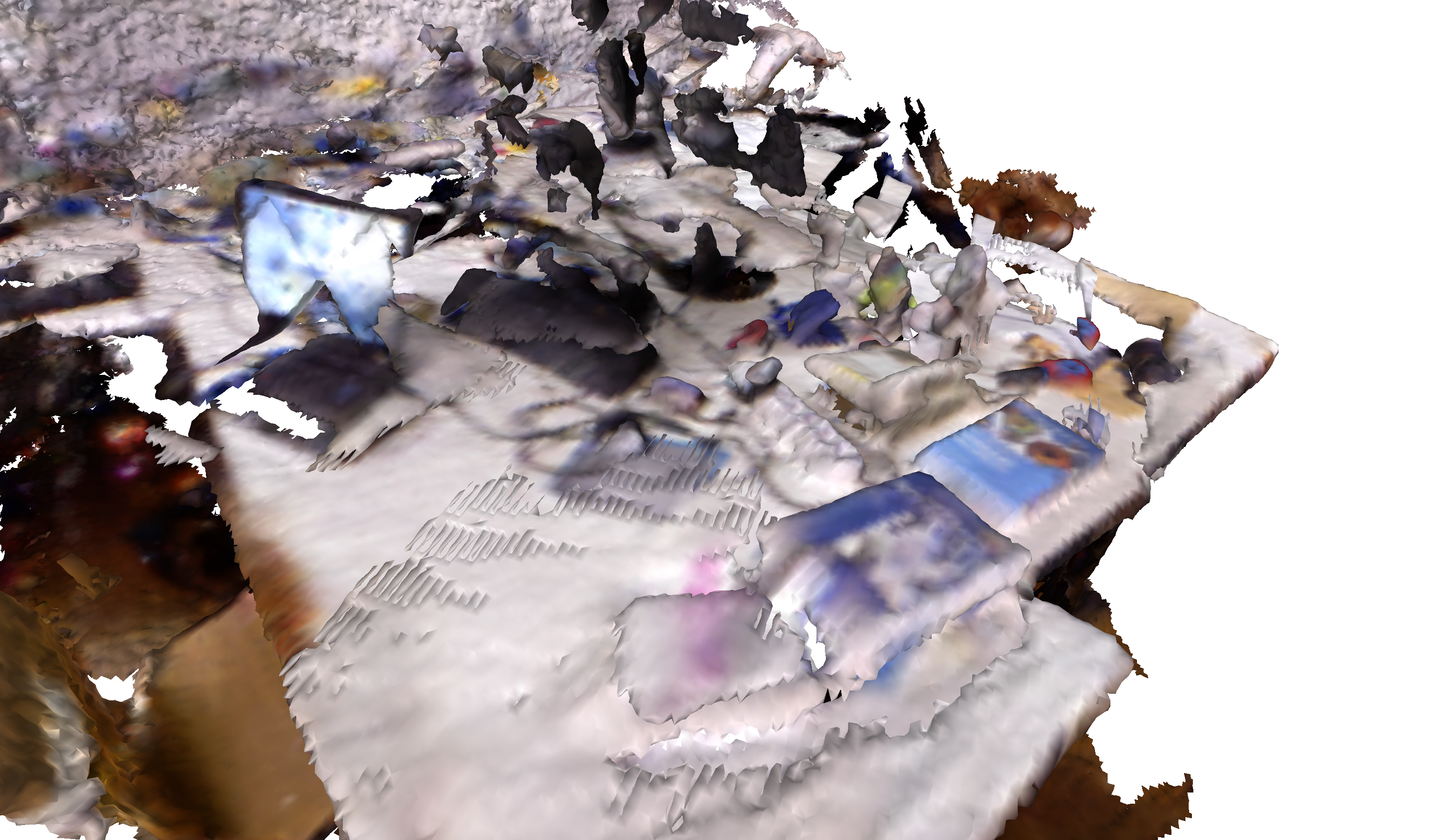

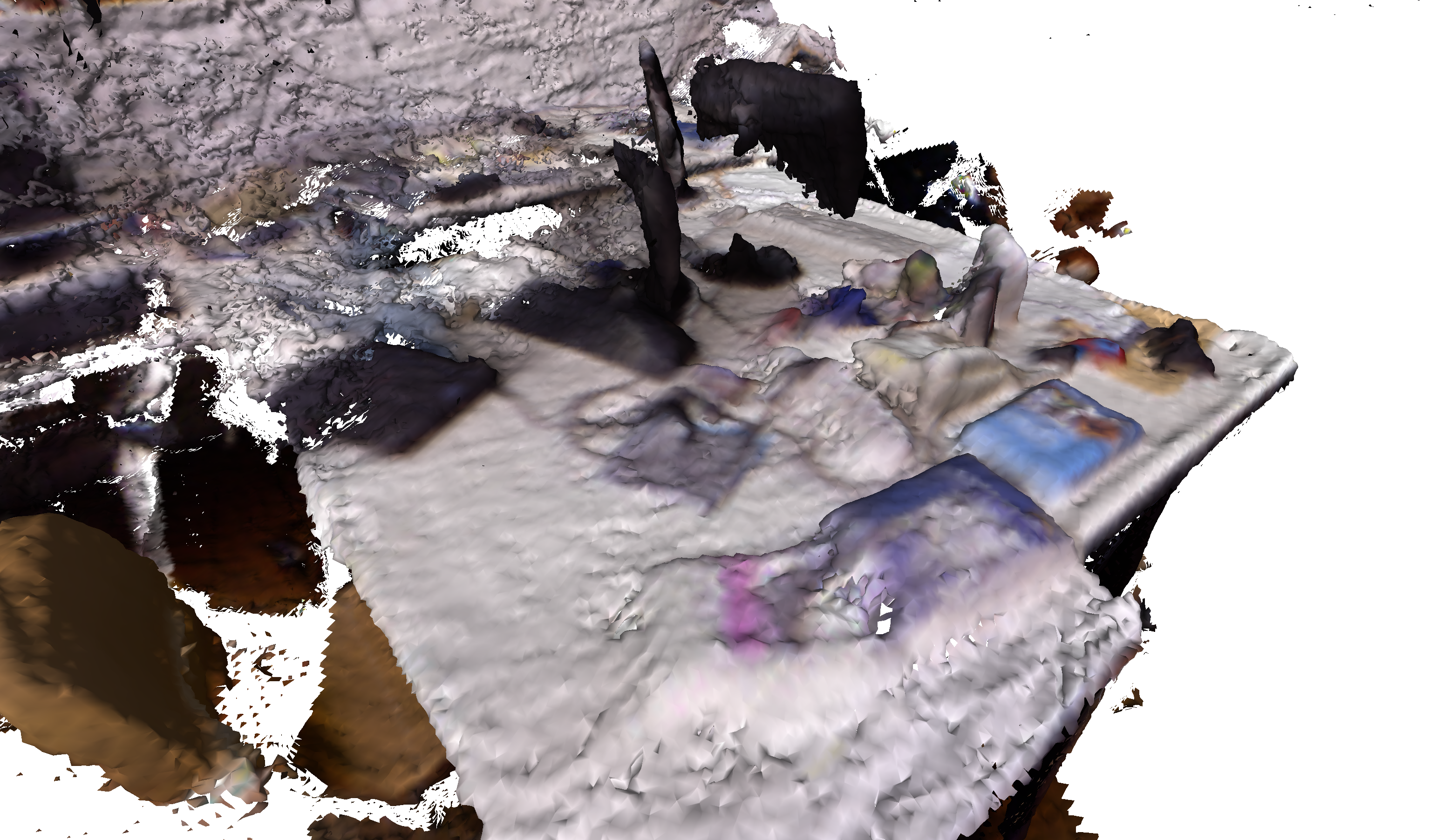

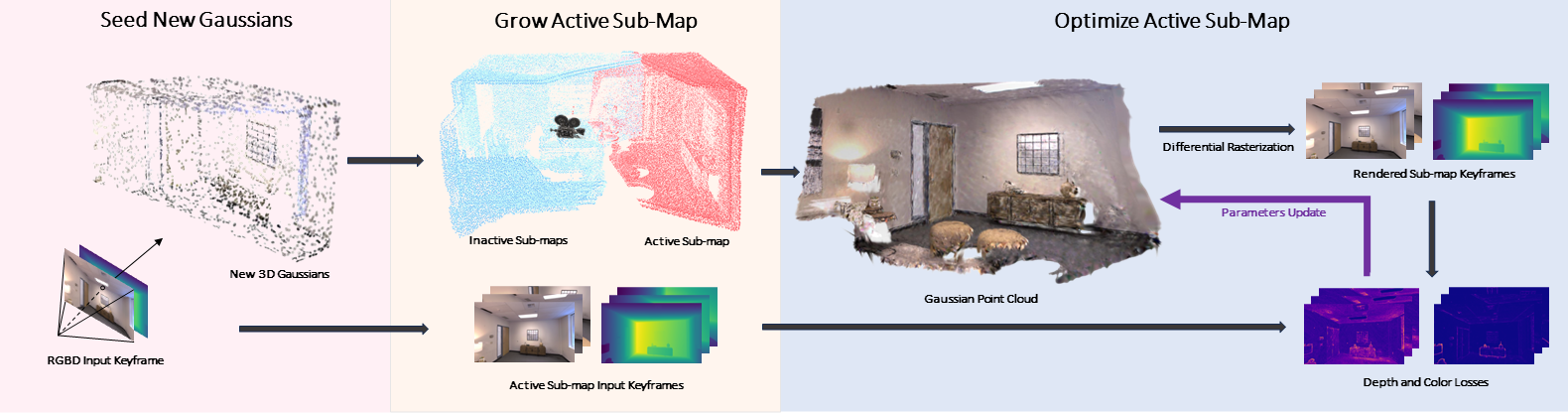

Method Overview

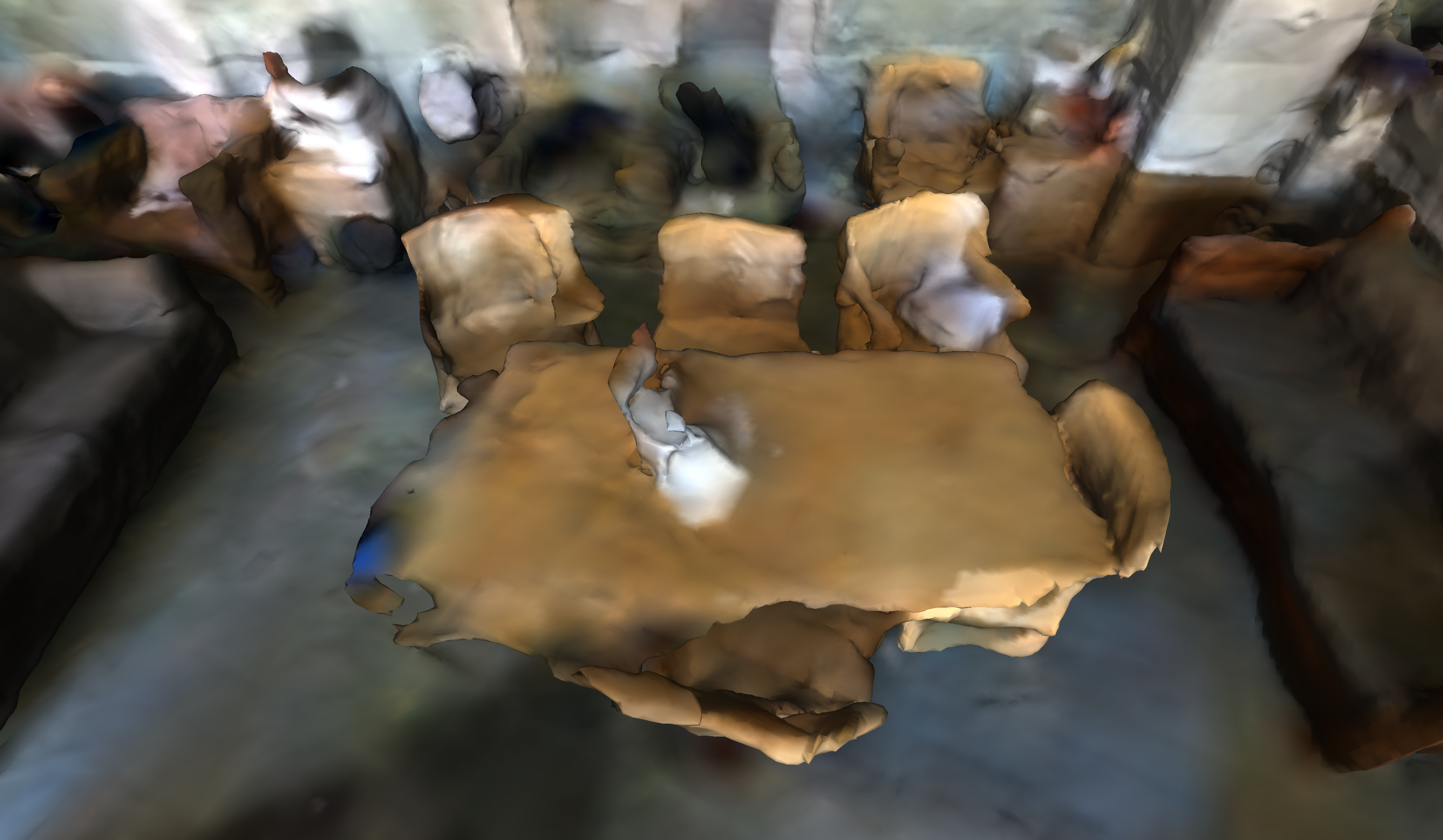

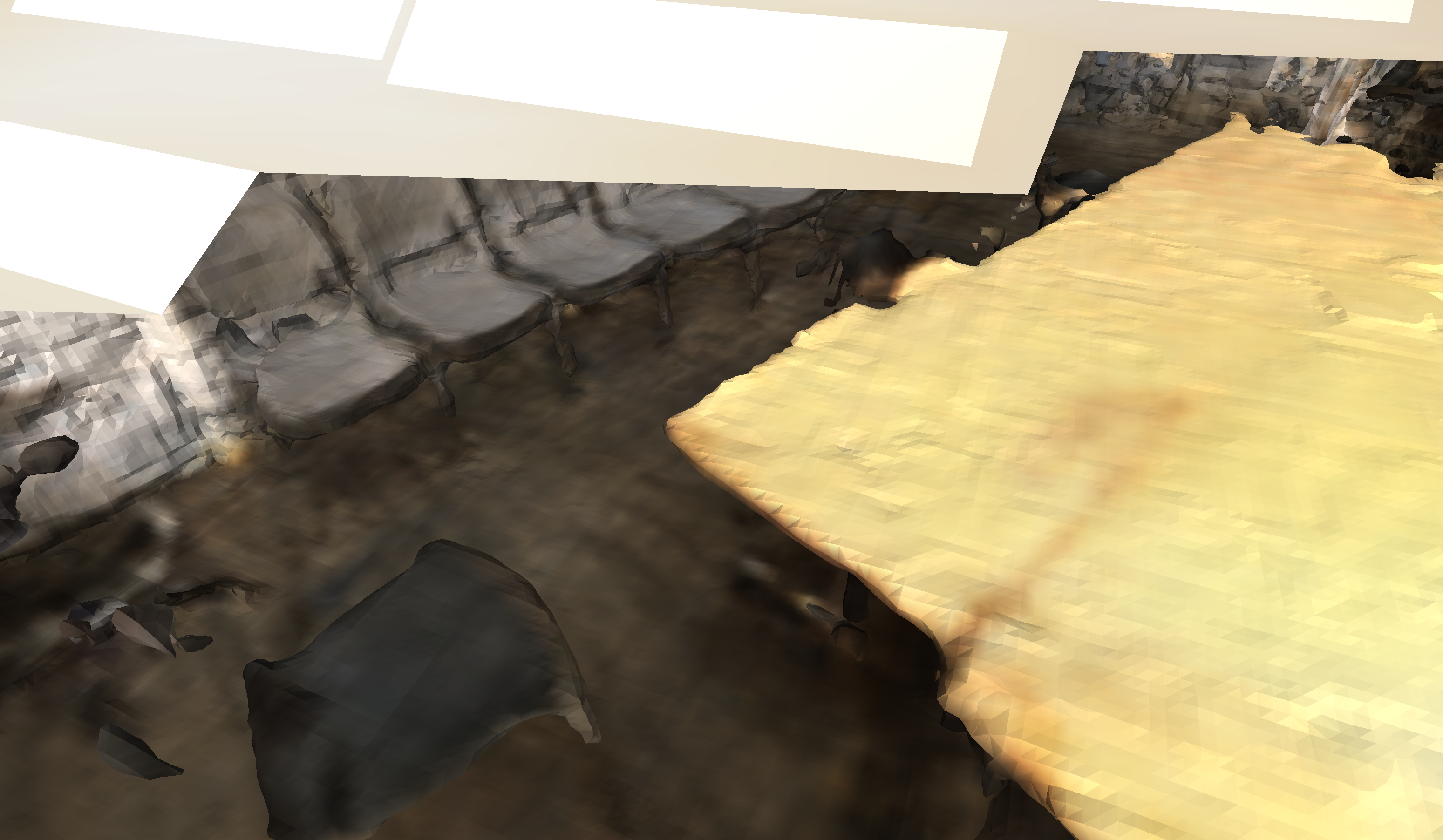

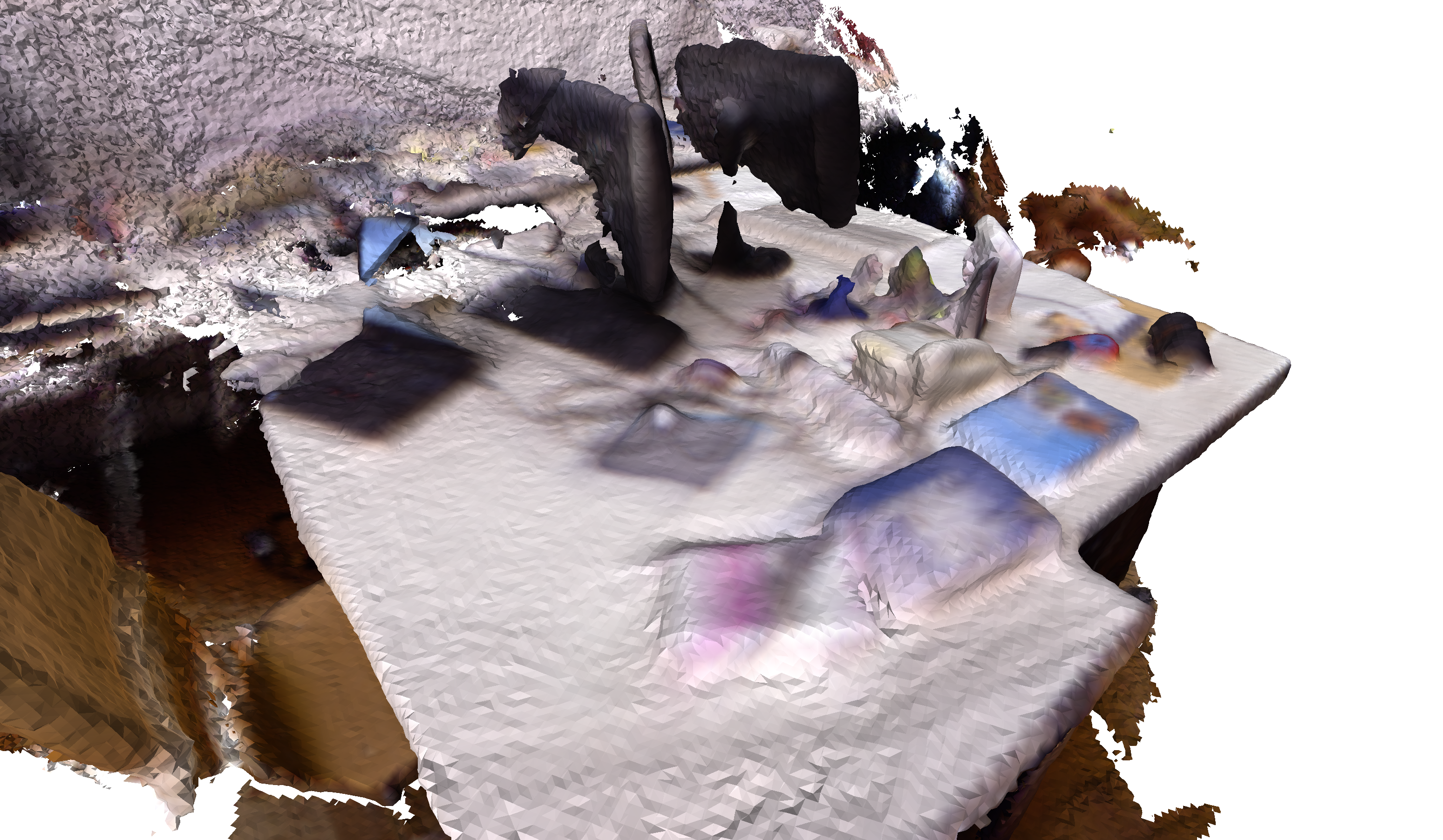

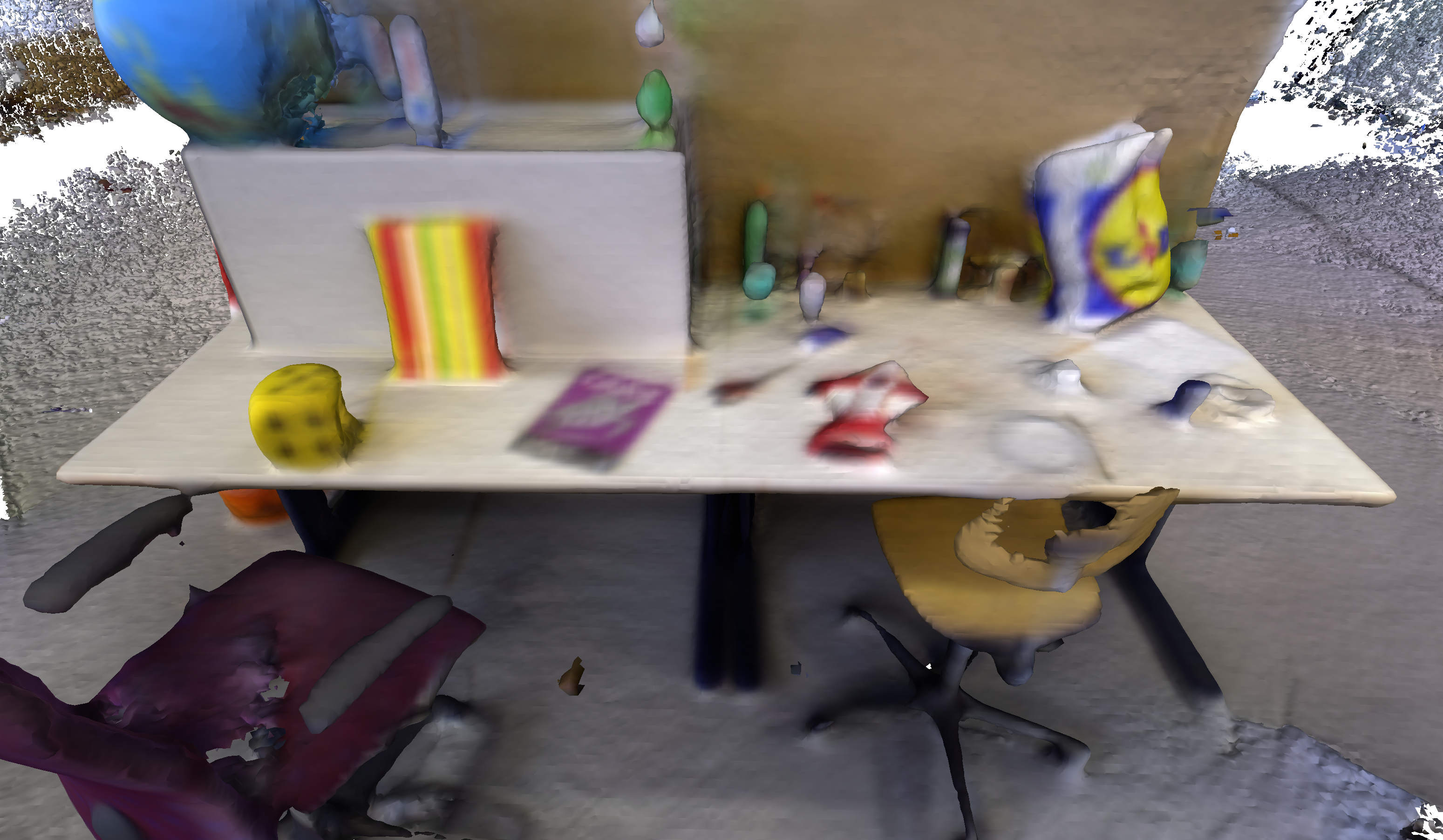

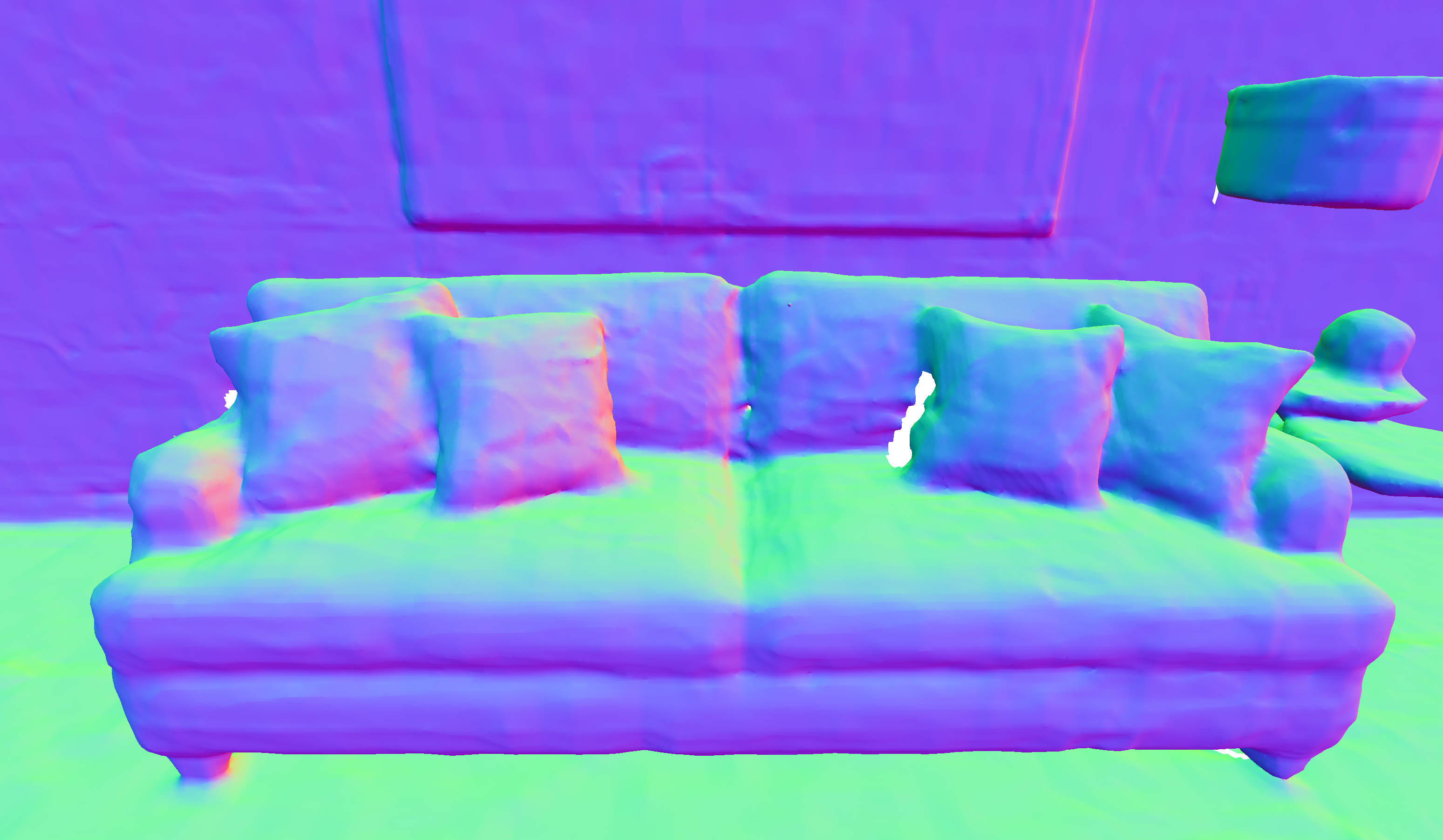

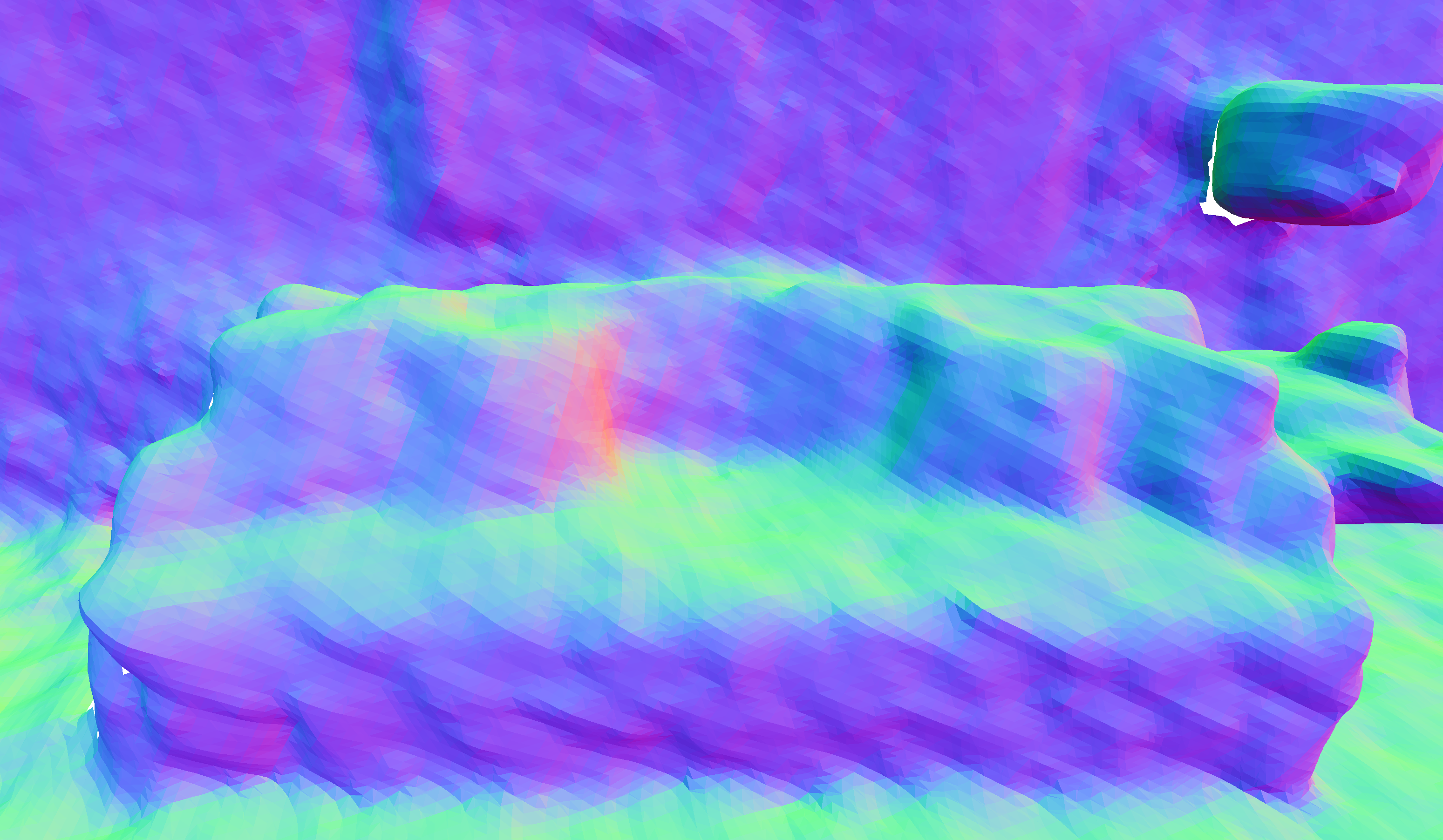

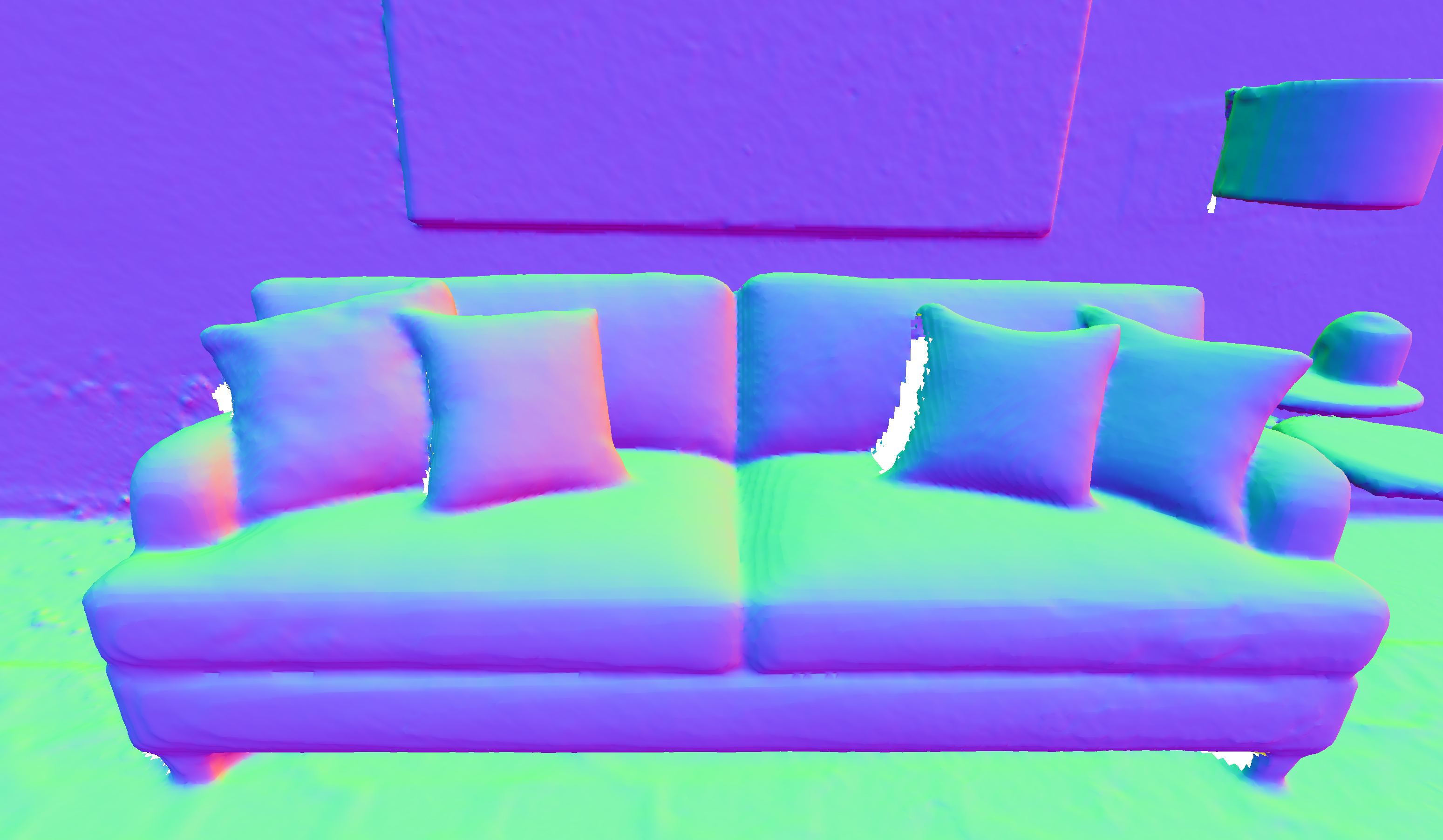

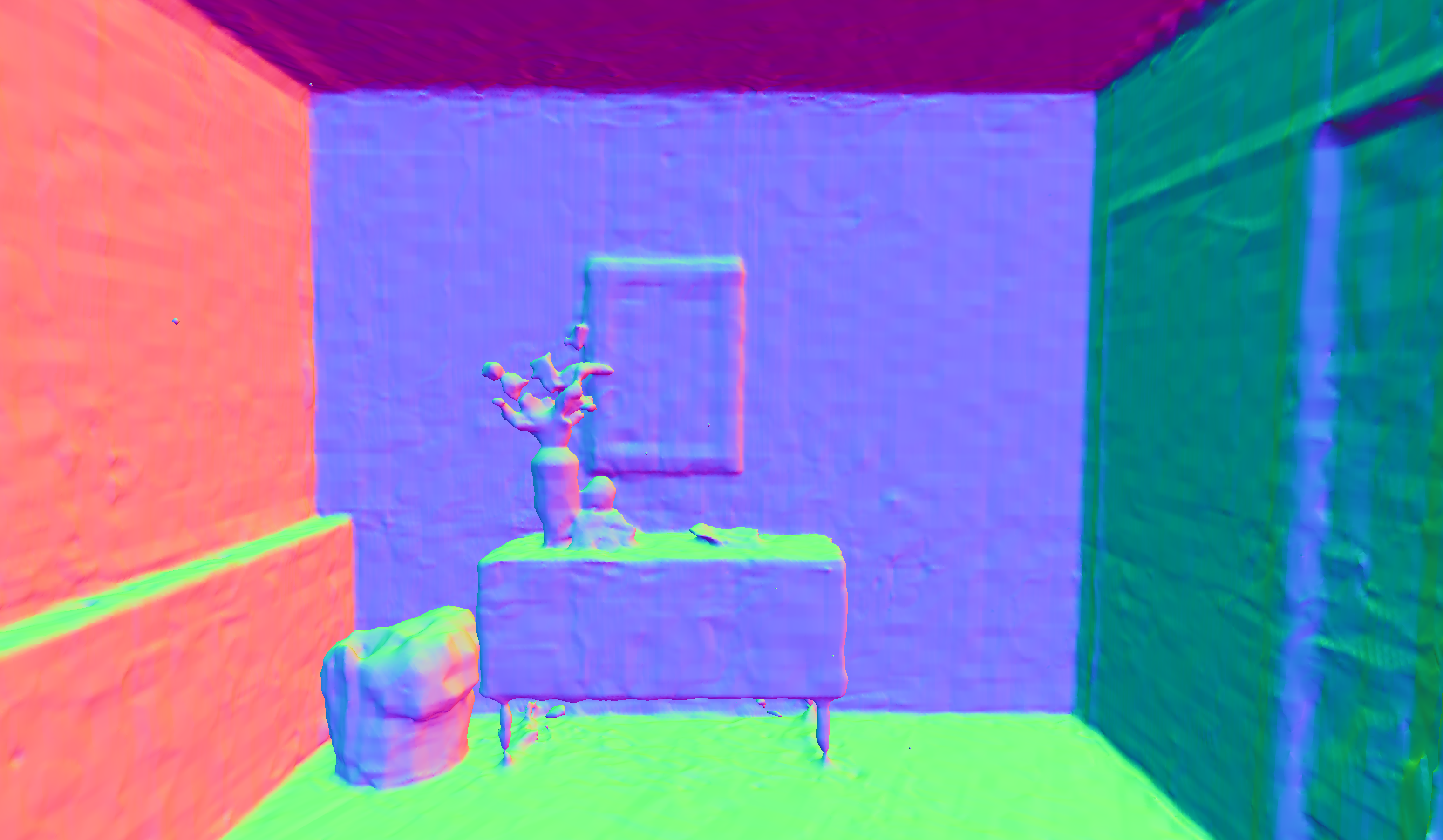

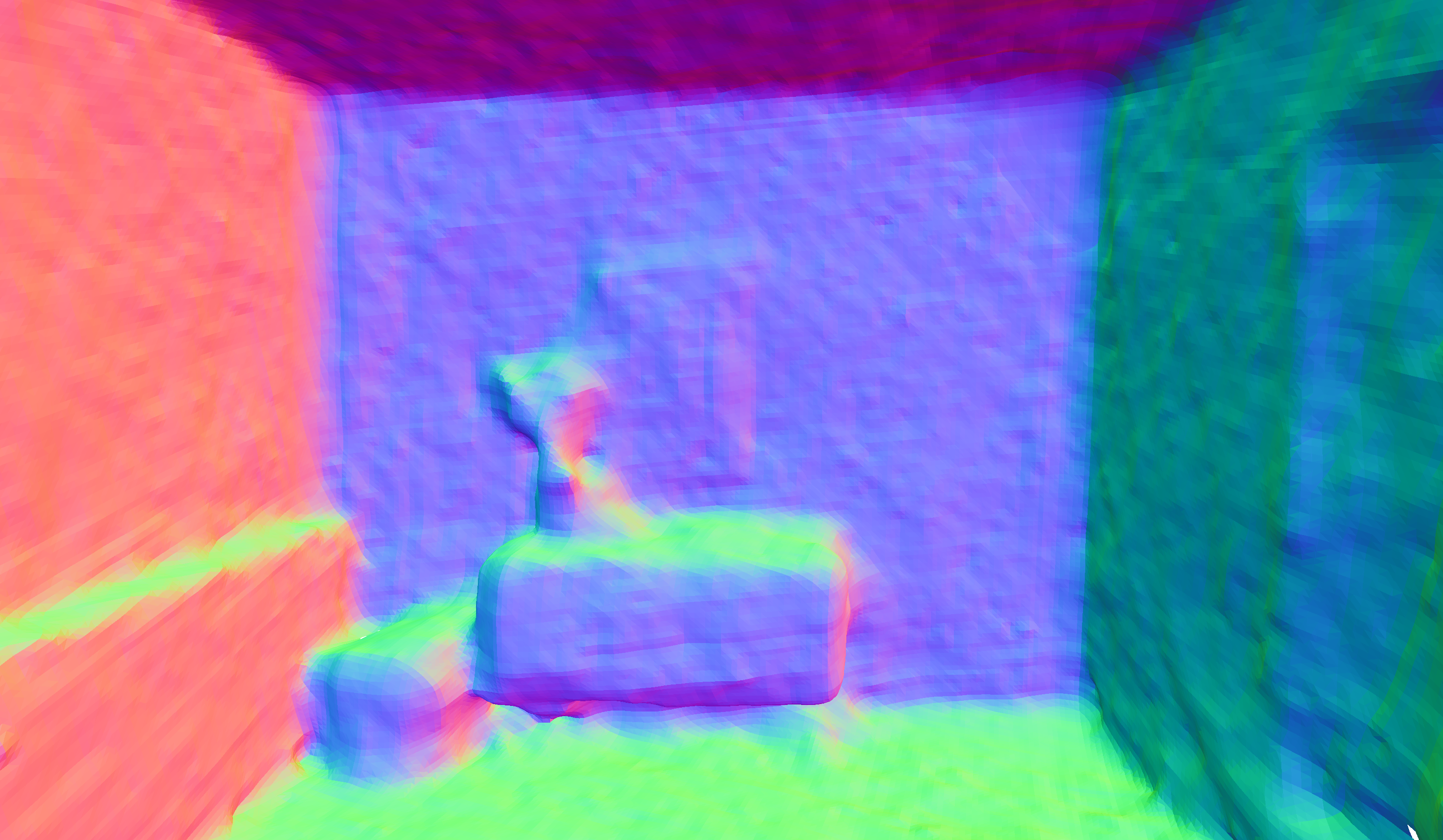

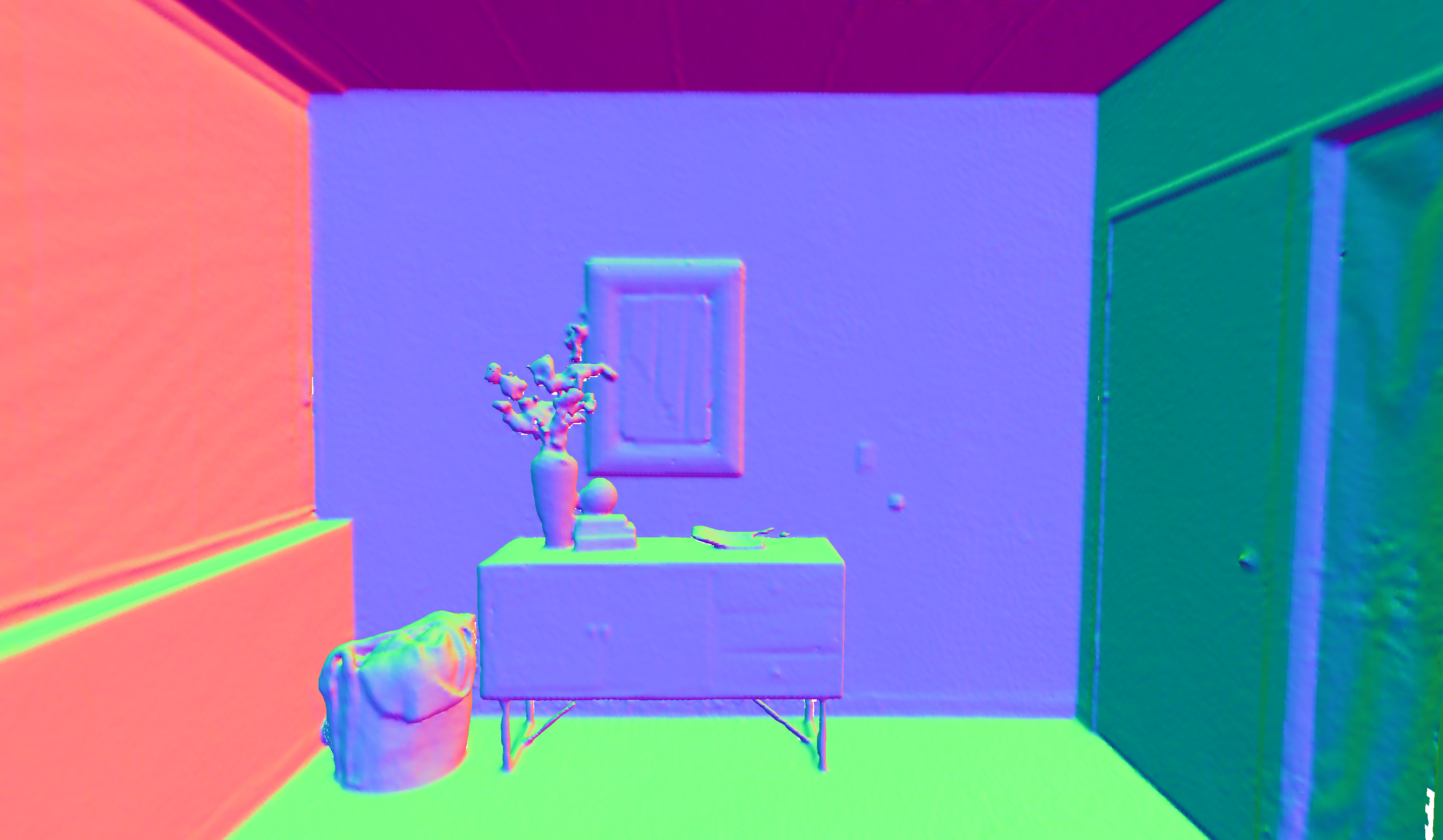

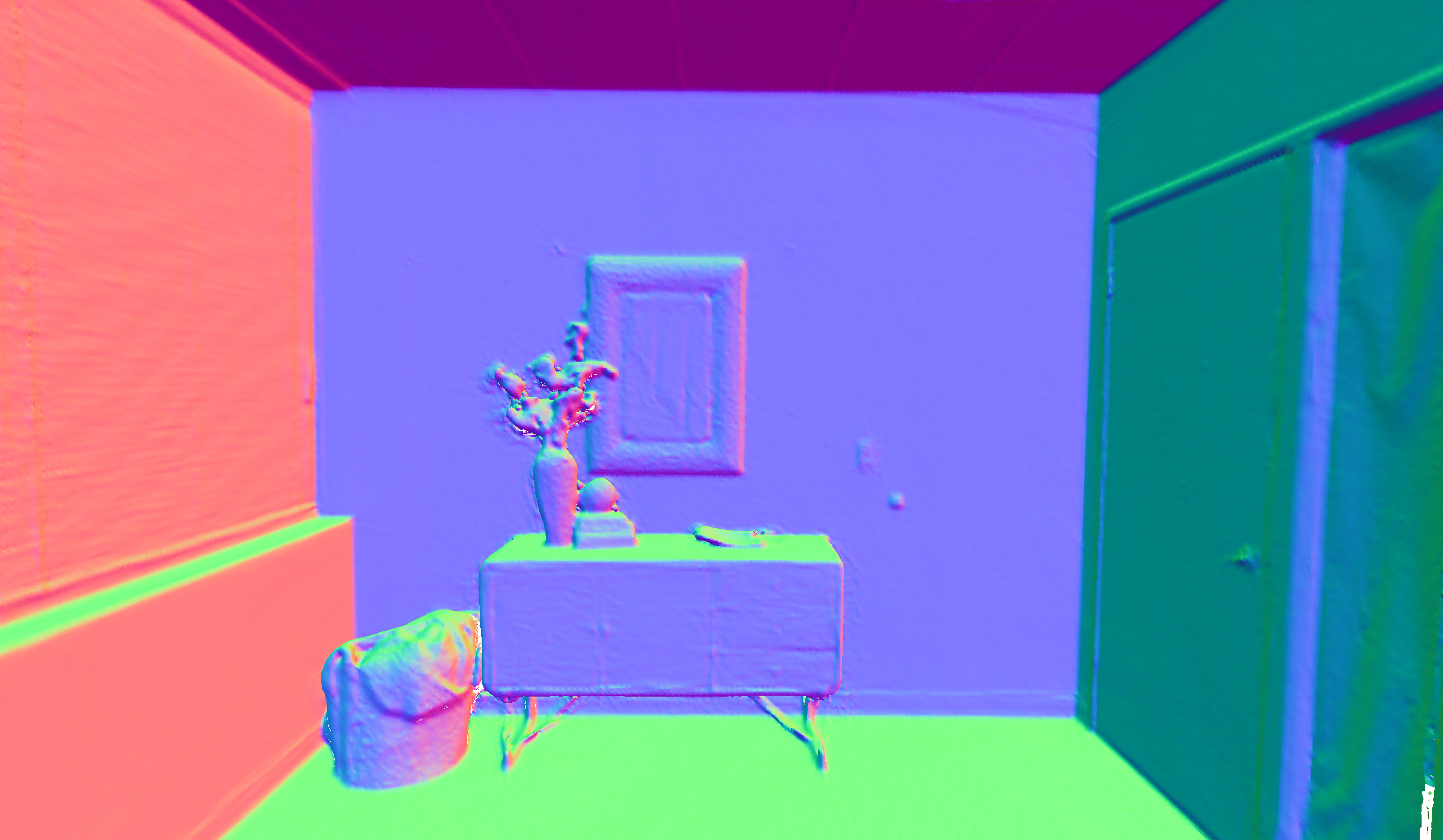

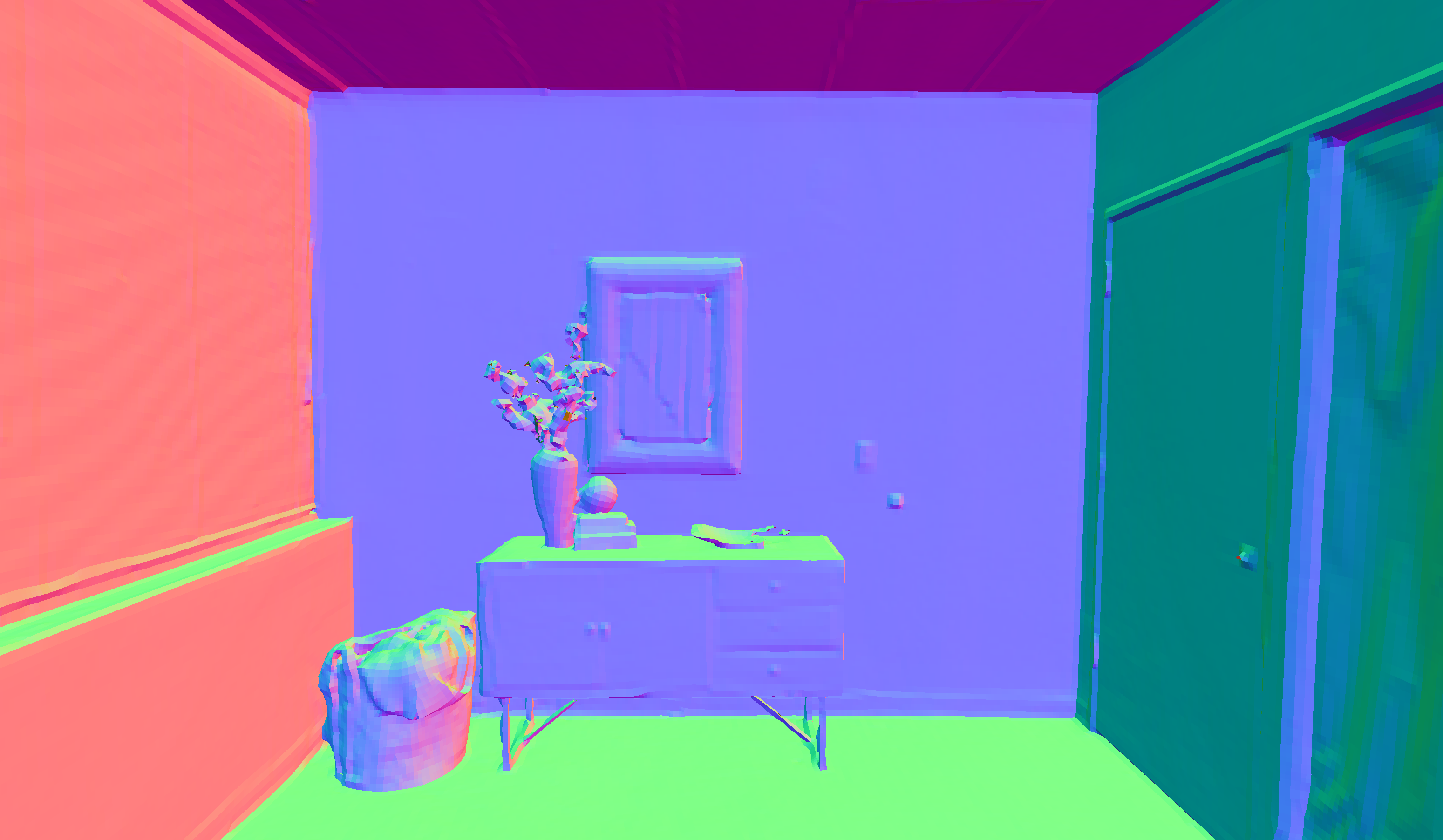

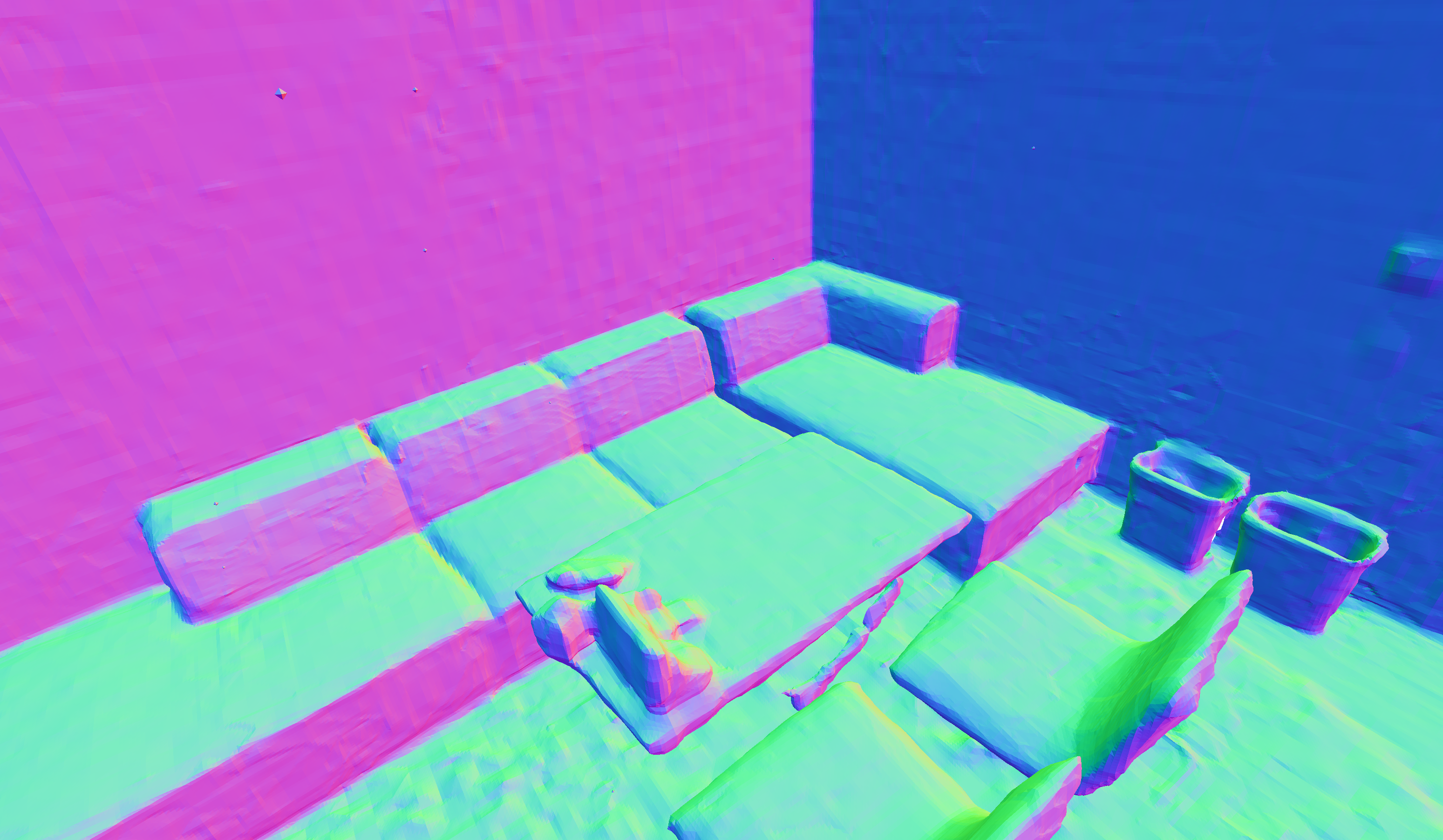

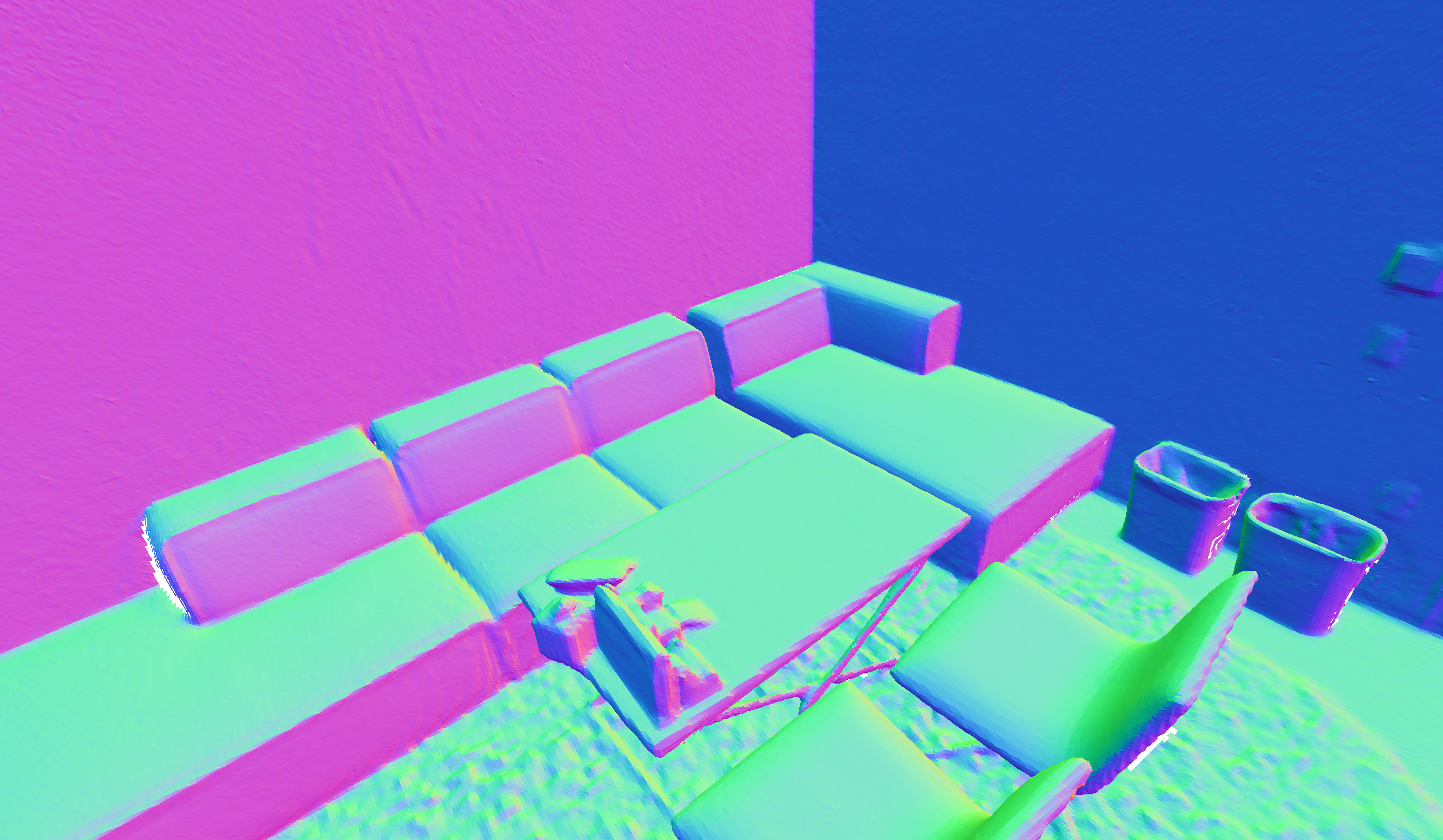

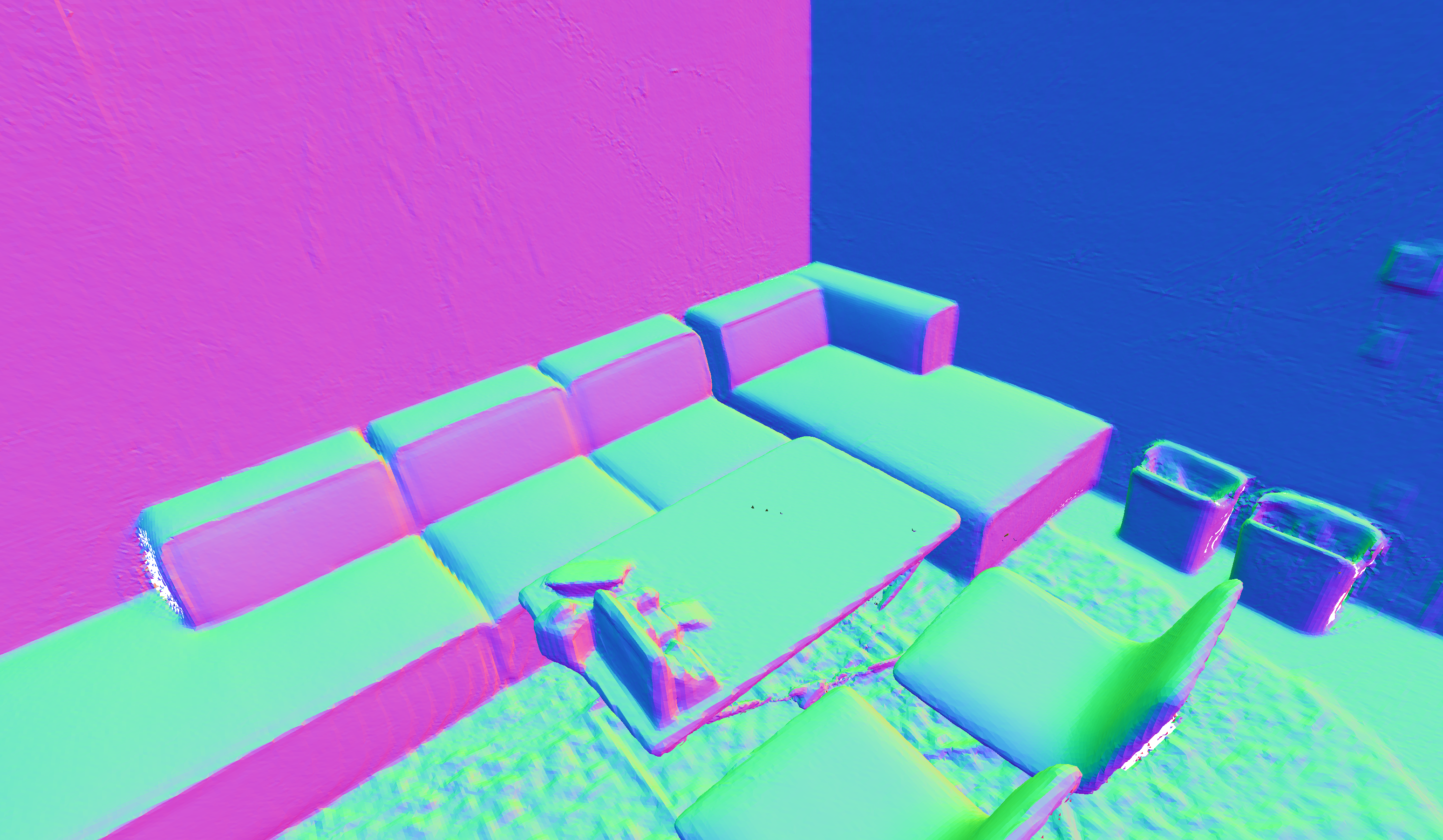

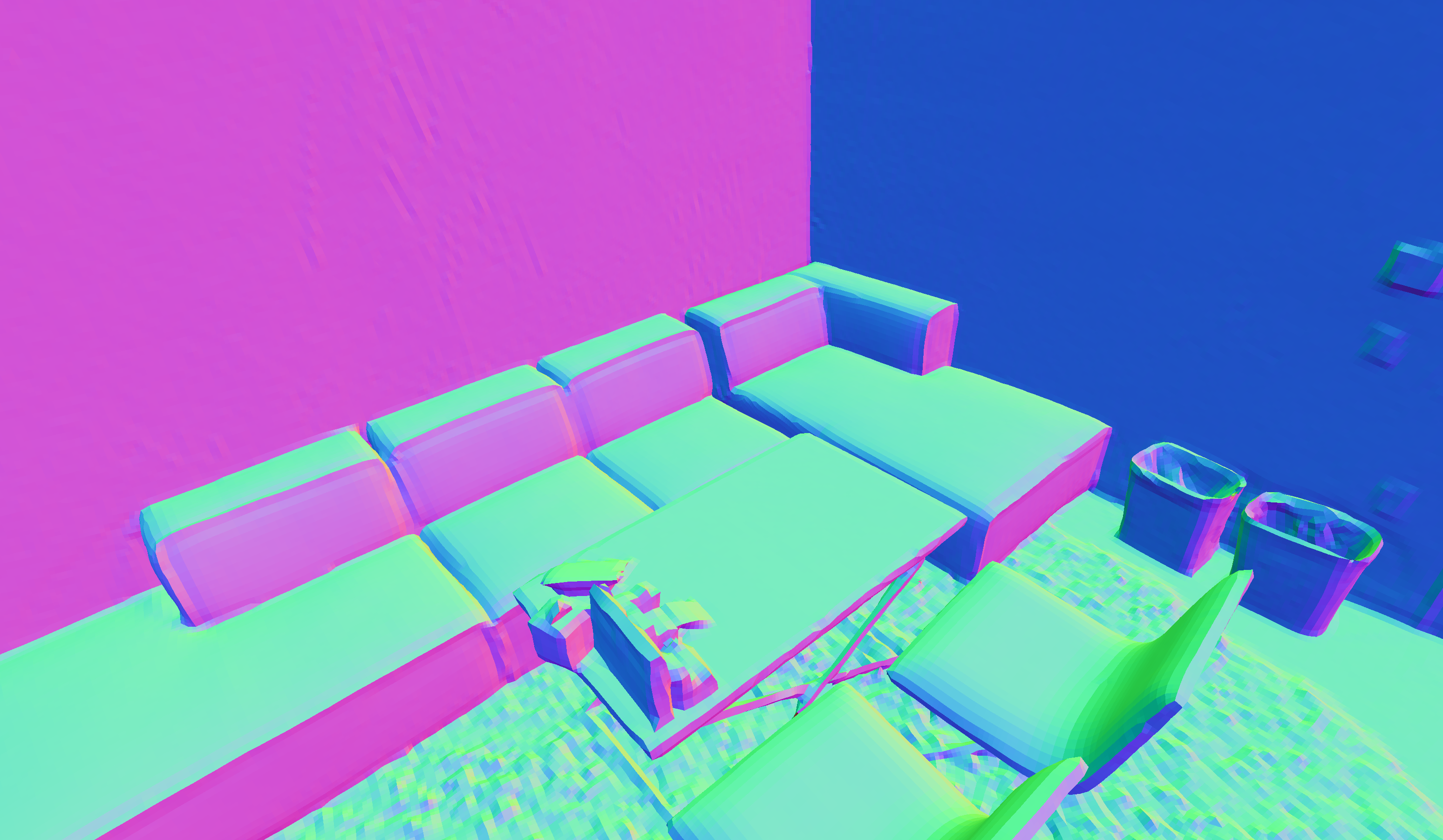

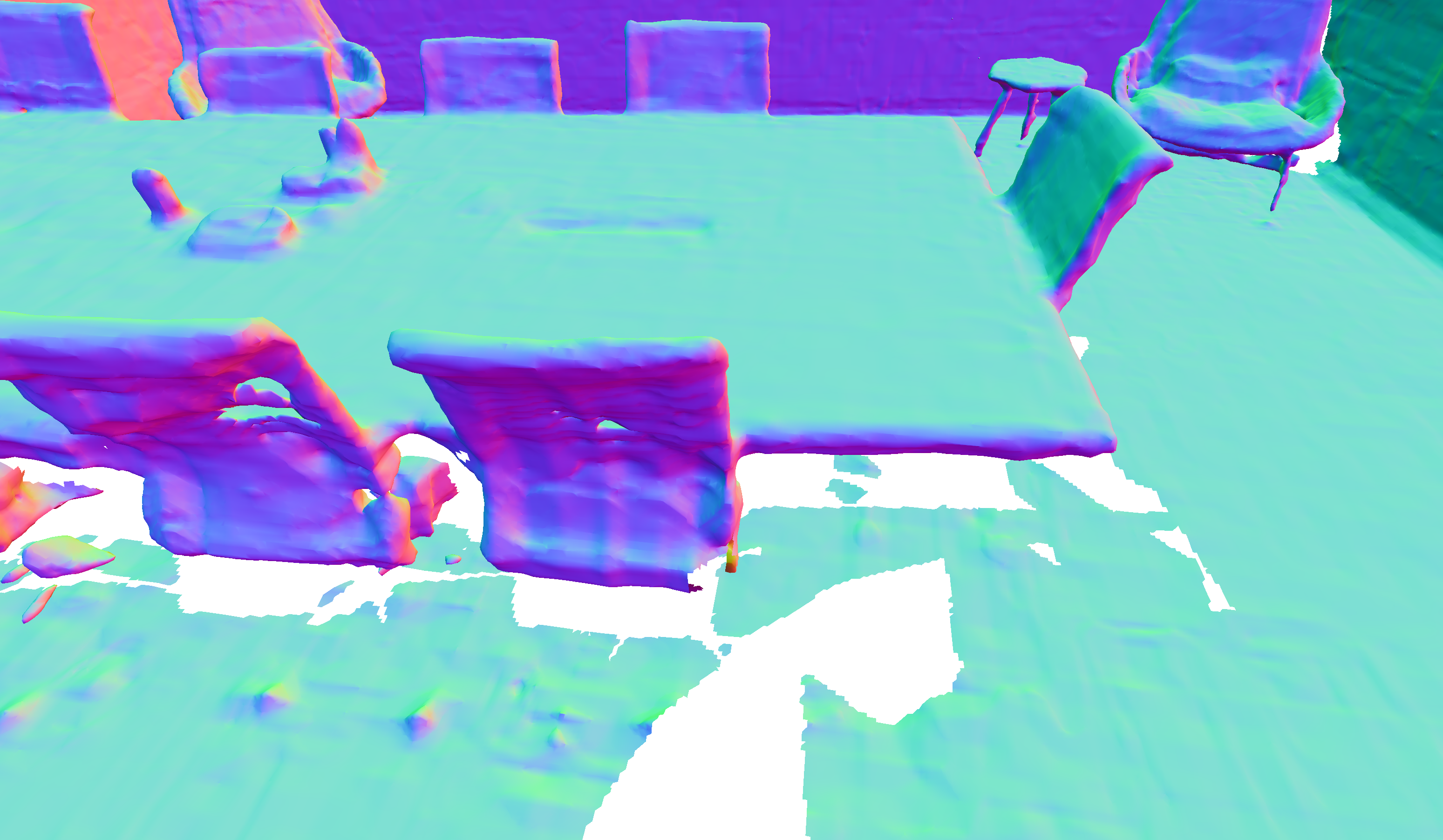

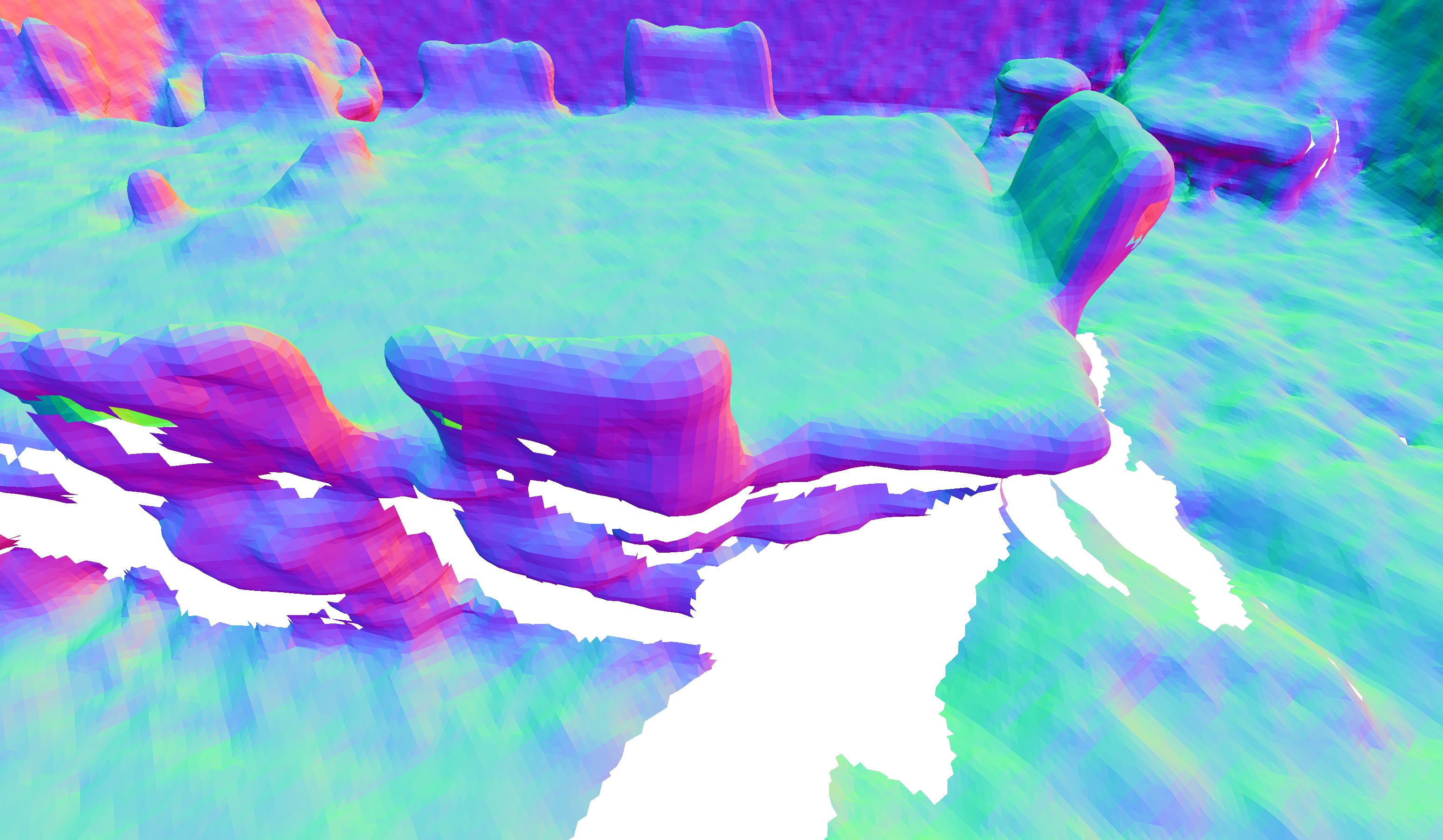

Each input is a posed RGBD keyframe. The keyframe is first subsampled, taking the color gradient into account. The sampled pixels are then projected into 3D space, and new Gaussians are initialized with their means at these locations. The new 3D Gaussians are added to the sparse regions of the currently active segment (sub-map) of the global map. The input keyframe is temporarily stored alongside the other keyframes that have contributed to the active sub-map. Once the new Gaussians have been integrated, all keyframes contributing to the active sub-map are rendered, and the depth and color losses are computed with respect to these input keyframes. The parameters of the 3D Gaussians in the active sub-map are then updated. The rendering, loss computation, and parameter update are repeated for a fixed number of iterations.
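The seeding stage described above (gradient-aware subsampling, projection into 3D, and insertion into sparse regions) can be sketched in NumPy. This is only an illustrative sketch, not the authors' implementation: the function names, the sampling probability proportional to gradient magnitude, the pinhole intrinsics `K`, the camera-to-world pose convention, and the nearest-neighbor `radius` test for "sparse regions" are all assumptions made here. Rendering and optimization of the Gaussians are not covered.

```python
import numpy as np

def sample_pixels_by_gradient(rgb, num_samples, rng):
    """Sample pixel coordinates with probability proportional to the
    color-gradient magnitude (assumed sampling rule)."""
    gray = rgb.astype(np.float64).mean(axis=2)
    gy, gx = np.gradient(gray)            # derivatives along rows, then columns
    weight = np.hypot(gx, gy) + 1e-6      # small floor so flat areas stay sampleable
    prob = (weight / weight.sum()).ravel()
    flat = rng.choice(prob.size, size=num_samples, replace=False, p=prob)
    v, u = np.unravel_index(flat, gray.shape)
    return u, v                           # column (x) and row (y) indices

def backproject(u, v, depth, K, cam_to_world):
    """Lift pixels with valid depth into world space using a pinhole model."""
    z = depth[v, u]
    valid = z > 0                         # drop pixels without a depth reading
    u, v, z = u[valid], v[valid], z[valid]
    x = (u - K[0, 2]) * z / K[0, 0]
    y = (v - K[1, 2]) * z / K[1, 1]
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=1)
    return (pts_cam @ cam_to_world.T)[:, :3]

def keep_points_in_sparse_regions(new_pts, existing_means, radius):
    """Keep candidates with no existing Gaussian mean within `radius`
    (assumed sparsity criterion; brute-force distance check)."""
    if len(existing_means) == 0:
        return new_pts
    diff = new_pts[:, None, :] - existing_means[None, :, :]
    nearest_sq = (diff ** 2).sum(axis=2).min(axis=1)
    return new_pts[nearest_sq > radius ** 2]
```

The surviving points would serve as the means of the new Gaussians added to the active sub-map. The brute-force distance check is quadratic in the number of points, so a spatial index would likely be preferable for maps of realistic size.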